Human-AI interactions support explainable AI

Because many machine learning models are opaque, explainable AI (XAI) should reveal how and why they work.

Source: Figure 2, "XAI Concept": New machine-learning systems will have the ability to explain their rationale, characterize their strengths and weaknesses, and convey an understanding of how they will behave in the future.

With XAI, a human in the loop cultivates a symbiosis between human and AI agents, which leads to:

What human-computer interface techniques might translate machine learning models into explanations for humans?

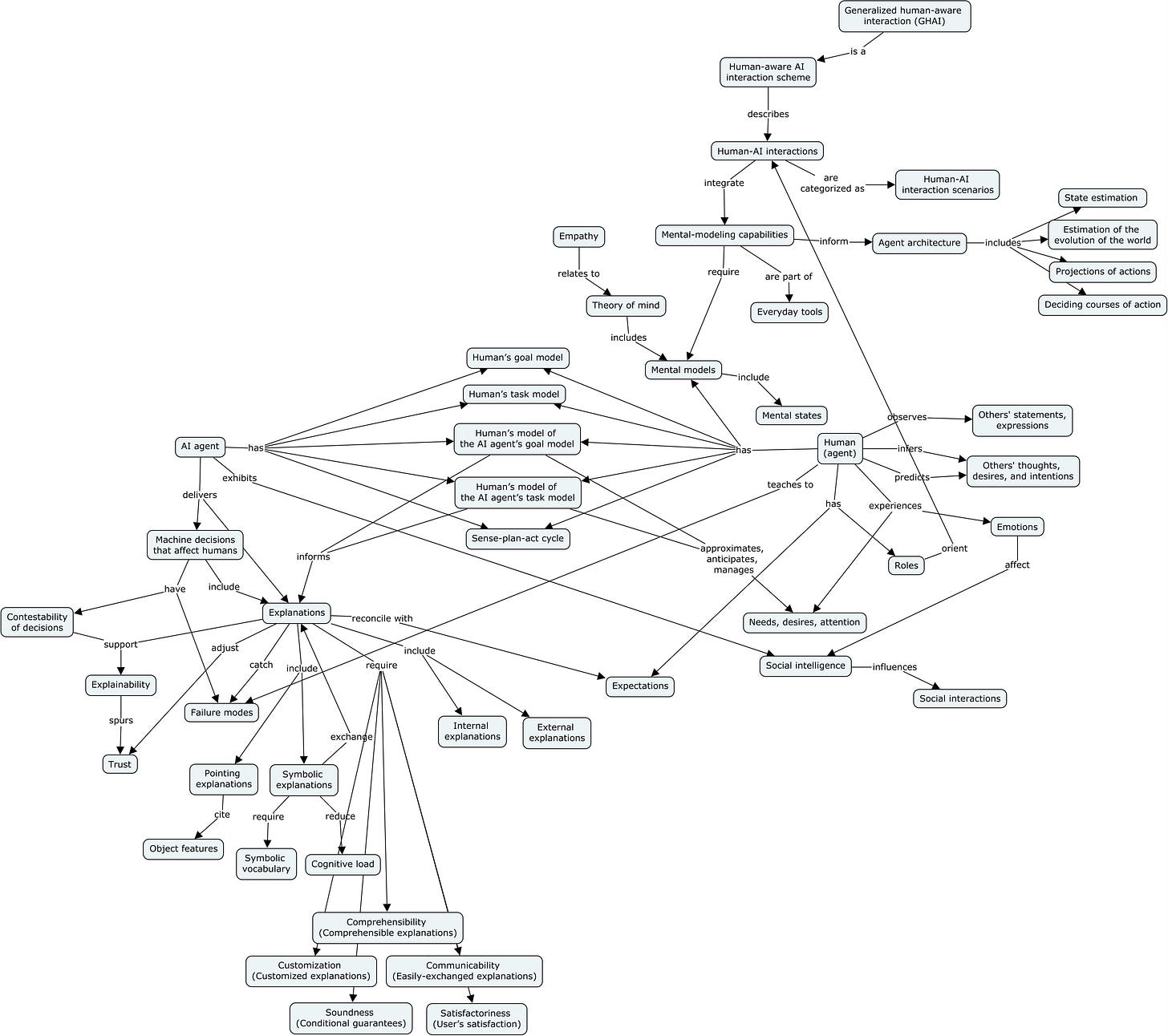

I benefited from studying research published by Sarath Sreedharan, Anagha Kulkarni, and Subbarao Kambhampati.¹ They argue that AI agents need to go beyond planning with models of the world and also account for the mental models² of the humans they interact with, a concept drawn from theory of mind.

The following graphs describe part of what human-aware AI interaction involves. Although these capabilities are not yet realized, the propositions are written in the present tense.

Please share your feedback, and use this material in accordance with the Creative Commons license.³